Frontier research, in its natural evolution, produces some potential cornerstones that, in the end, can prove to be plainly wrong. When one of these cornerstones forms, even if no sound confirmation is at hand, it can make the life of researchers really hard: it can be hard to get papers published when an opposite thesis is supported, and all this without any certainty that the cornerstone is true. You can ask all the people who initially proposed the now dubbed “decoupling solution” for the propagators of Yang-Mills theory in the Landau gauge, and all of them will tell you how difficult it was to get their papers through peer review. The solution that was generally believed to be the right one at that time, the now dubbed “scaling solution”, convinced a large part of the community that it was the one of choice, all this without any strong support from experiment, lattice computations or a rigorous mathematical derivation. This kind of behavior is quite old in the scientific community and has not changed since the very beginning of science. If one is lucky, things go straight and scientific truth is rapidly acquired; otherwise, this behavior produces delays and obstacles for respectable researchers and makes it seriously difficult to understand the solution of a fundamental question.

Perhaps the most famous case of this kind of behavior was the discovery by Tsung-Dao Lee and Chen-Ning Yang of parity violation in weak interactions in 1956. At that time, it was generally believed that parity was an untouchable principle of physics. Those who believed so were proven wrong shortly after Lee and Yang’s paper. For the propagators of Yang-Mills theory in the Landau gauge, recent lattice computations on huge volumes showed that the scaling solution never appears in dimensions greater than two. Rather, the right scenario seems to be provided by the decoupling solution. In this scenario, the gluon propagator in the deep infrared is a Yukawa-like propagator or a sum of them. There is a very compelling reason to have such propagators in a strongly coupled regime: the low-energy limit recovers a Nambu-Jona-Lasinio model, which provides a very good description of strong interactions at lower energies.

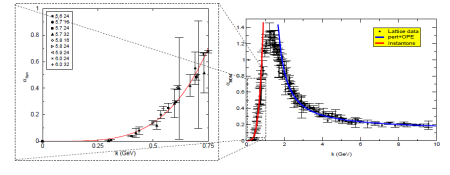

From a physical standpoint, what does a Yukawa propagator, or a sum of Yukawa propagators, mean? It has a dramatic meaning for the running coupling: the theory is just trivial in the infrared limit. This is exactly what the decoupling solution says, as it emerged from lattice computations (see here).
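To make the link between a Yukawa-like propagator and a vanishing coupling concrete, here is a minimal Python sketch. It assumes the ghost-gluon (minimal MOM) definition of the running coupling often used in the Landau gauge, α(p²) ∝ Z(p²)G(p²)², where Z = p²D(p²) is the gluon dressing function and G the ghost dressing function, and it assumes G stays finite in the infrared, as in the decoupling scenario. The values in `RESIDUES` and `MASSES` are hypothetical numbers chosen only for illustration, not fits to lattice data.

```python
# Hypothetical illustrative parameters: residues Z_n (dimensionless) and masses m_n (GeV).
RESIDUES = [1.0, 0.5]
MASSES = [0.7, 1.5]

def gluon_propagator(p2: float) -> float:
    """Sum of Yukawa-like terms Z_n / (p^2 + m_n^2); finite at p^2 = 0."""
    return sum(z / (p2 + m * m) for z, m in zip(RESIDUES, MASSES))

def coupling_proxy(p2: float, ghost_dressing: float = 1.0) -> float:
    """Ghost-gluon coupling up to an overall constant: Z(p^2) * G(p^2)**2,
    with gluon dressing Z = p^2 * D(p^2) and an assumed finite ghost dressing G."""
    return p2 * gluon_propagator(p2) * ghost_dressing ** 2

if __name__ == "__main__":
    print(f"D(0) = {gluon_propagator(0.0):.4f} GeV^-2")
    for p2 in (1.0, 0.1, 0.01, 0.001):
        print(f"p^2 = {p2:g} GeV^2 -> alpha proxy = {coupling_proxy(p2):.3e}")
```

Because D(0) is finite, p²D(p²), and hence the coupling proxy, vanishes like p² as p² → 0; any sum of Yukawa terms with positive masses behaves the same way.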

What really matters here is the way one defines the running coupling in the deep infrared, and this definition must be consistent. Indeed, one can think of a different definition (see here), working things out using instantons, and one sees the following:

One can see that, independently of the definition, the coupling runs to zero in the deep infrared, marking the property of a trivial theory. This idea currently appears difficult for the community to digest, as a conventional wisdom has formed that Yang-Mills theory should have a non-trivial fixed point in the infrared limit. There is no evidence whatsoever for this, and Nature does not provide any example of a pure Yang-Mills theory: it always appears interacting with fermions. Lattice data say the contrary, as we have seen, but a general belief is enough to make life hard for researchers trying to pursue such a view. It is interesting to note that some theoretical frameworks need a non-trivial infrared fixed point for Yang-Mills theory, otherwise they would crumble.

But from a theoretical standpoint, what is the right approach to derive the behavior of the running coupling for a Yang-Mills theory? The answer is quite straightforward: any consistent theoretical framework for Yang-Mills theory should be able to obtain the beta function in the deep infrared. From the beta function, one immediately has the right behavior of the running coupling. But in order to get it, one should be able to work out the Callan-Symanzik equation for the gluon propagator. So far, this is explicitly given in my papers (see here and refs. therein), as I am able to obtain the behavior of the mass gap as a function of the coupling. The relation between the mass gap and the coupling produces the scaling of the beta function in the Callan-Symanzik equation. Any serious attempt to understand Yang-Mills theory in the low-energy limit should provide this connection. Otherwise it is not mathematics but just heuristics with a lot of parameters to be fixed.
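As a generic illustration of how the running coupling follows once a beta function is known, the sketch below integrates dα/d ln μ = β(α) numerically with a fixed-step Runge-Kutta scheme. It is not the Callan-Symanzik derivation from the papers cited here; it only checks the integrator against the textbook one-loop result for pure SU(N) Yang-Mills, β(α) = -β₀α²/(2π) with β₀ = 11N/3, and then uses a hypothetical infrared form β(α) = 2α, which corresponds to α ∝ μ² and so to a coupling that runs to zero in the deep infrared. The function names and the toy infrared beta function are assumptions made for this example.

```python
import math
from typing import Callable

def run_coupling(beta: Callable[[float], float], alpha0: float,
                 mu0: float, mu: float, steps: int = 10_000) -> float:
    """Integrate d(alpha)/d(ln mu) = beta(alpha) from mu0 to mu with classic RK4."""
    h = (math.log(mu) - math.log(mu0)) / steps  # step in t = ln(mu)
    a = alpha0
    for _ in range(steps):
        k1 = beta(a)
        k2 = beta(a + 0.5 * h * k1)
        k3 = beta(a + 0.5 * h * k2)
        k4 = beta(a + h * k3)
        a += h * (k1 + 2 * k2 + 2 * k3 + k4) / 6
    return a

N_COLORS = 3
BETA0 = 11 * N_COLORS / 3  # one-loop coefficient for pure SU(N) Yang-Mills, no quarks

def beta_one_loop(alpha: float) -> float:
    return -BETA0 * alpha ** 2 / (2 * math.pi)

def alpha_one_loop_exact(alpha0: float, mu0: float, mu: float) -> float:
    """Closed form: 1/alpha(mu) = 1/alpha0 + BETA0/(2 pi) * ln(mu/mu0)."""
    return 1 / (1 / alpha0 + BETA0 / (2 * math.pi) * math.log(mu / mu0))

def beta_ir_toy(alpha: float) -> float:
    """Hypothetical infrared beta function; it gives alpha proportional to mu^2."""
    return 2.0 * alpha

if __name__ == "__main__":
    print(run_coupling(beta_one_loop, 0.2, 10.0, 100.0),
          alpha_one_loop_exact(0.2, 10.0, 100.0))
    for mu in (1.0, 0.1, 0.01):
        print(mu, run_coupling(beta_ir_toy, 0.5, 1.0, mu))
```

The same integrator works for any beta function one can write down, which is the sense in which knowing β in the deep infrared fixes the running there.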

The final consideration after this discussion is that conventional wisdom in science should always be challenged when no sound foundations are given for it to hold. In a review process, as an editorial practice, referees should be asked to check this before killing good works on shaky grounds.

I. L. Bogolubsky, E.-M. Ilgenfritz, M. Müller-Preussker, and A. Sternbeck (2009). Lattice gluodynamics computation of Landau-gauge Green’s functions in the deep infrared. Phys. Lett. B 676, 69-73 (2009). arXiv: 0901.0736v3

Ph. Boucaud, F. De Soto, A. Le Yaouanc, J. P. Leroy, J. Micheli, H. Moutarde, O. Pène, and J. Rodríguez-Quintero (2002). The strong coupling constant at small momentum as an instanton detector. JHEP 0304, 005 (2003). arXiv: hep-ph/0212192v1

Marco Frasca (2010). Mapping theorem and Green functions in Yang-Mills theory. PoS FacesQCD, 039 (2010). arXiv: 1011.3643v3

Let’s see if I have this right. I think I have understood your post correctly, but please correct me if I have misunderstood your presentation, which I set forth in points (1)-(7) below. I then have a few other questions.

(1) The strength of the strong force coupling constant of QCD “runs” with the energy scale of the interaction in the following manner: (a) first, it rises from a low or zero value as the energy scale of the interaction increases, up to a peak with a dimensionless value of about 1.25 at an energy scale on the order of 200 MeV (the numbers are eyeballed from your first graph), and (b) then it declines quite rapidly immediately after the peak, but eventually more gradually all the way up to infinity, in accordance with whatever the correct form of the beta function that describes the running of the strong force coupling constant of QCD may be.

(2) The strength of the strong force coupling constant of QCD actually tends towards zero in the limit as the energy scale of the interaction approaches zero. A strong force coupling constant that runs in this way at low energy levels is called the “decoupling solution.” You have successfully developed the details of how this happens, mostly using lattice methods.

(3) The correct result in (2) is contrary to the old conventional wisdom which was that the strong force coupling constant of QCD tended towards a non-zero minimum in the limit as the energy scale of the interaction approaches zero. A strong force coupling constant that runs according to the old conventional wisdom is called a “scaling solution.”

(4) This ruins the day of some QCD theorists, because “some theoretical frameworks” need a scaling solution to be valid. I’d be curious to know which theoretical frameworks in particular are undermined by a trivial infrared fixed point in QCD.

(5) It sucks to try to publish scientific papers that run against conventional wisdom, even if you know you will probably be proven right in the end.

(6) Science has always been like this and always will be, because scientists, being human, jump on the bandwagon of new theories that make sense, even if they haven’t been rigorously proven, and stay there until strong evidence overturns those theories.

(7) The peer review process should recognize this bias, give otherwise legitimate scientists challenging the scientific orthodoxy the benefit of the doubt, and evaluate the merits of the underdog’s theories based on the facts.

A few related questions:

Is it true that, under your analysis of the decoupling solution, the strong force coupling constant, in interactions at energy scales above the roughly 200 MeV where it peaks, declines at a rate more or less in accord with traditional calculations of the beta function that describes the running of the strong force coupling constant of QCD (as illustrated by the second graph)? In other words, are there no indications that the beta function that governs the running of the strong force coupling constant of QCD is significantly wrong in the ultraviolet limit?

As I understand it, the advocates of the decoupling solution and the advocates of the scaling solution both agree on the exact terms of the Yang-Mills equations of Standard Model QCD and, within reason, on the values of the physical constants in those equations, to the extent that they have been determined by experiment and are available from sources like the Particle Data Group. The problem, as I understand it, is that the math of the exact Yang-Mills equations, outside highly specialized circumstances that simplify them dramatically, is simply too hard to solve analytically and exactly, although arbitrarily precise and accurate approximations of the exact equations can be obtained using lattice methods with sufficient computational power and clever computer programming. So, in QCD, different theorists propose different equations to approximate the exact equations and then test them against numerical lattice approximations, which are valid tests as long as the exact Yang-Mills equations of QCD are correct.

Am I correct in understanding that the dispute between the decoupling solution advocates and the scaling solution advocates is over which formulas produce the most correct and precise approximations of the exact QCD equations of the SM? Or am I wrong, i.e., is there a dispute over the terms of the exact Yang-Mills equations of QCD?

How strongly do experimental results constrain lattice method approximations in QCD? Given the lack of precision of key constants like quark masses, I have to think that the inputs into lattice method approximations can’t be all that precisely known.

Off topic, but inspired by your presentation: what do you think of the 2008, 2009 and 2010 papers by I. M. Suslov that are cited by Wikipedia’s Landau pole article (I. M. Suslov, JETP 107, 413 (2008); JETP 111, 450 (2010); http://arxiv.org/abs/1010.4081, http://arxiv.org/abs/1010.4317; I. M. Suslov, JETP 108, 980 (2009), http://arxiv.org/abs/0804.2650), which suggest a tweak to the usually used QED beta function that the author derives from more fundamental considerations? Suslov claims that this tweak to the QED beta function prevents a Landau pole from arising in QED at high energies and extremely short distances. Does what Suslov is doing make sense? I gave the papers a quick read and saw nothing glaringly wrong with them.

Wouldn’t a beta function that eliminates the Landau pole in extremely short distance QED resolve one of the theoretical inconsistencies between QED and GR by preventing one class of GR singularities from arising as a consequence of the point-like nature of SM particles and the energies created by their short distance interactions?

But I am surprised that a breakthrough of the magnitude claimed by Suslov (even if the QED Landau pole is only of academic interest, since it arises so profoundly far above even the TOE scale) hasn’t received more publicity and recognition. After all, this would seem to have important implications for unification schemes and might undermine some of the need for BSM physics. In particular, it might impact the rhetorically powerful convergence of the three running coupling constant graphs used to argue for SUSY or another GUT over the SM. Do you see any potential weakness in Suslov’s approach?

If Suslov is on the right track, what implications, if any, do you think his readjustment of the ultraviolet running of the fine structure constant (and presumably, by analogy, of the weak force coupling constant) has for the convergence of the three coupling constants in GUTs?
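As a rough picture of the convergence argument mentioned here, the sketch below runs the three Standard Model gauge couplings at one loop, 1/α_i(μ) = 1/α_i(M_Z) - (b_i/2π) ln(μ/M_Z), with the usual SM coefficients b = (41/10, -19/6, -7) (U(1) in GUT normalization), and finds the scale where each pair of lines crosses. The inputs at M_Z are approximate values chosen for illustration, two-loop and threshold effects are ignored, and nothing here models Suslov’s modified beta function.

```python
import math

M_Z = 91.1876  # GeV
# Approximate inverse couplings at M_Z (U(1) in GUT normalization); illustrative inputs.
INV_ALPHA_MZ = {"U(1)": 59.0, "SU(2)": 29.6, "SU(3)": 8.5}
# One-loop SM coefficients in 1/alpha_i(mu) = 1/alpha_i(M_Z) - b_i/(2 pi) ln(mu/M_Z).
B_SM = {"U(1)": 41 / 10, "SU(2)": -19 / 6, "SU(3)": -7.0}

def inv_alpha(group: str, mu_gev: float) -> float:
    """One-loop inverse coupling of the given gauge group at scale mu (GeV)."""
    return INV_ALPHA_MZ[group] - B_SM[group] / (2 * math.pi) * math.log(mu_gev / M_Z)

def crossing_log10_gev(g1: str, g2: str) -> float:
    """log10 of the scale (GeV) where the two inverse couplings are equal."""
    da = INV_ALPHA_MZ[g1] - INV_ALPHA_MZ[g2]
    db = (B_SM[g1] - B_SM[g2]) / (2 * math.pi)
    t = da / db  # ln(mu / M_Z) at the crossing
    return math.log10(M_Z) + t / math.log(10)

if __name__ == "__main__":
    for pair in (("U(1)", "SU(2)"), ("U(1)", "SU(3)"), ("SU(2)", "SU(3)")):
        print(pair, f"cross near 10^{crossing_log10_gev(*pair):.1f} GeV")
```

With these inputs the three pairwise crossings fall at roughly 10^13, 10^14 and 10^17 GeV, so the Standard Model lines do not meet at a single point; that mismatch is the usual starting point of the argument for supersymmetric or other GUT extensions.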

Dear ohwilleke,

Sorry, but there is a flaw in your reasoning. My analysis applies to a pure Yang-Mills theory. QCD with quarks has a non-trivial infrared fixed point. You should not worry about this, because authoritative colleagues of mine make the same confusion.

Besides this, let me explain what people mean by the scaling and decoupling solutions. These refer to the propagators of a pure Yang-Mills theory in the Landau gauge. Please note that I have specified the gauge, as propagators are gauge-dependent quantities. The reason to choose this particular gauge is that the equations become more tractable and their solution on the lattice is easier. Initially, people thought that the gluon propagator should go to zero as momenta are lowered. In this scenario, the now dubbed scaling solution, the ghost propagator goes to infinity faster than a free-particle propagator and the running coupling reaches a non-trivial fixed point, in agreement with conventional wisdom. This scenario held for several years because the computational power was not enough to reach sufficiently large volumes. So, it formed a kind of paradigm, and it was really difficult for people presenting a different view to get their papers published. In 2007, computers were powerful enough to reach volumes as large as (27 fm)^4, a huge volume indeed. Computations showed that the scaling solution never appears in dimensions greater than 2 (at d=2 it is there); rather, the gluon propagator reaches a finite non-zero value at zero momentum, a behavior reminiscent of a Yukawa propagator or a sum of them. This was a real breakthrough for the community, but the idea that a scaling solution must be there in any case persists. Indeed, today we know that this solution is rather peculiar and emerges only when one fixes the coupling to a finite non-zero value at zero momentum. But this produces an instability. Please note that this is not yet QCD, as we have ignored quarks. The stakes are high anyhow, as knowing the form of the gluon propagator in the infrared limit could give an important clue toward the low-energy limit of QCD, as was also devised in the ’80s.
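The difference between the two scenarios can be sketched with their infrared power laws. The Python snippet below assumes the standard scaling-solution ansatz, gluon dressing Z ∝ (p²)^(2κ) and ghost dressing G ∝ (p²)^(-κ) with κ ≈ 0.595 as usually quoted for four dimensions, and, for the decoupling case, a single Yukawa term with a finite ghost dressing. Both use the ghost-gluon definition α ∝ ZG². The values of `M2` and `GHOST_DRESSING_0` are hypothetical, and overall normalizations are dropped.

```python
KAPPA = 0.595           # IR exponent commonly quoted for the 4d scaling solution
M2 = 0.5                # hypothetical gluon mass squared (GeV^2), decoupling case
GHOST_DRESSING_0 = 3.0  # hypothetical finite ghost dressing as p^2 -> 0

def scaling(p2: float) -> dict:
    """Infrared power laws of the scaling solution (requires p2 > 0)."""
    z = p2 ** (2 * KAPPA)  # gluon dressing function
    g = p2 ** (-KAPPA)     # ghost dressing function
    return {"gluon_prop": z / p2, "ghost_prop": g / p2, "alpha": z * g * g}

def decoupling(p2: float) -> dict:
    """Yukawa-like gluon propagator with a finite ghost dressing."""
    gluon_prop = 1.0 / (p2 + M2)
    g = GHOST_DRESSING_0
    ghost_prop = g / p2 if p2 > 0 else float("inf")
    return {"gluon_prop": gluon_prop, "ghost_prop": ghost_prop,
            "alpha": p2 * gluon_prop * g * g}

if __name__ == "__main__":
    for p2 in (1e-1, 1e-2, 1e-3):
        s, d = scaling(p2), decoupling(p2)
        print(f"p^2={p2:g}: scaling alpha={s['alpha']:.3f}, "
              f"decoupling alpha={d['alpha']:.2e}")
```

With these forms the scaling coupling is constant (the non-trivial infrared fixed point), the scaling gluon propagator vanishes like (p²)^(2κ-1) and the ghost propagator grows like (p²)^(-1-κ), faster than 1/p²; the decoupling coupling instead goes to zero like p².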

As this example shows, there is an elementary psychological mechanism at work that is not very rational: a paradigm forms even if there is no sound reason to support it. This mechanism appears when some strong group internal to a given community (let us call them “the proponents”) is able to wield enough power to let their idea emerge against all others, even better ones. In order to get such a hurdle removed, a breakthrough must happen, and this could require a very long time, with a consequent loss of time and resources. Let me say that this harms a lot of people. A rational community should not let vested interests override the need to reach the truth, as this is the main aim of the scientific enterprise and it has costs in resources and money. But editors and referees are part of the game, so the whole procedure can be deeply biased, and one cannot prevent this effect from taking place over and over again.

About Suslov’s work, I have to say that you cannot spot any mistake there simply because that work is correct and in perfect agreement with mine (read the paper of mine that I mention in the post). I have discussed this here. It is another way to prove that a scalar field theory is trivial in the infrared limit, a fact that Michael Aizenman proved for d>4, while the proof for d=4 has escaped so far, that is, until the papers by me and Suslov appeared. But the triviality of this theory applies as well to a pure Yang-Mills theory, since it has a very similar kind of nonlinearity. Indeed, in the Landau gauge, they share identical solutions representing instantons. My view is that the Landau pole does not exist but is just an artifact of perturbation theory. One can only use it as an indicator that a theory could display triviality.
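For reference, this is where the Landau pole comes from at the perturbative level. The sketch below uses the one-loop QED running with a single charged lepton (the electron), 1/α(μ) = 1/α(m_e) - (2/(3π)) ln(μ/m_e), and solves for the scale at which 1/α vanishes. It only shows where the one-loop formula breaks down; it says nothing about whether the pole survives beyond perturbation theory, which is the point under discussion. Keeping only the electron loop is a simplifying assumption, so the running is weaker than in the full Standard Model.

```python
import math

ALPHA_ME = 1 / 137.035999  # fine-structure constant at the electron mass scale
M_E_EV = 0.51099895e6      # electron mass in eV

def alpha_qed_one_loop(mu_ev: float, alpha0: float = ALPHA_ME,
                       mu0_ev: float = M_E_EV) -> float:
    """One-loop QED coupling with only the electron loop; inf past the pole."""
    inv_alpha = 1 / alpha0 - 2 / (3 * math.pi) * math.log(mu_ev / mu0_ev)
    return 1 / inv_alpha if inv_alpha > 0 else math.inf

def landau_pole_log10_ev(alpha0: float = ALPHA_ME, mu0_ev: float = M_E_EV) -> float:
    """log10 of the energy (eV) where the one-loop 1/alpha reaches zero."""
    ln_ratio = 3 * math.pi / (2 * alpha0)  # ln(mu_pole / mu0)
    return math.log10(mu0_ev) + ln_ratio / math.log(10)

if __name__ == "__main__":
    print(f"alpha(91.19 GeV), electron loop only: 1/{1 / alpha_qed_one_loop(91.19e9):.2f}")
    print(f"one-loop Landau pole near 10^{landau_pole_log10_ev():.1f} eV")
```

The one-loop pole lands around 10^286 eV, vastly beyond the Planck scale.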

Marco

Marco,

Thank you so much for your detailed answers. I had not caught the pure Yang-Mills vs. QCD distinction and appreciate you bringing it to my attention. I also appreciated your explanation of the links between your work, Suslov’s and Aizenman’s.

“On 2007 computers were powerful enough to reach volumes as large as (27 fm)^4” How much power do they have now, five years later?

With CUDA entering the game, most institutions can afford to assemble computers performing on the order of petaflops. But that volume was reached with a mainframe in Brazil working at teraflops.
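For a sense of scale, the arithmetic below relates a lattice with L sites per side and spacing a to its physical extent, and estimates the memory needed just to store the gauge links (four per site in four dimensions, each a full N_c × N_c complex matrix in double precision). The choice L = 128 and a = 0.21 fm is an assumption picked to give roughly 27 fm per side, and storing full SU(2) matrices is a simplification; production codes usually store them more compactly.

```python
def physical_extent_fm(sites_per_dim: int, spacing_fm: float) -> float:
    """Side length of the lattice in fm."""
    return sites_per_dim * spacing_fm

def link_memory_gib(sites_per_dim: int, n_colors: int, dim: int = 4,
                    bytes_per_real: int = 8) -> float:
    """Memory (GiB) for all gauge links stored as full complex N_c x N_c matrices."""
    sites = sites_per_dim ** dim
    reals_per_link = 2 * n_colors ** 2  # real and imaginary parts of each entry
    return sites * dim * reals_per_link * bytes_per_real / 2 ** 30

if __name__ == "__main__":
    L, a = 128, 0.21  # hypothetical lattice size and spacing (fm)
    print(f"extent: {physical_extent_fm(L, a):.1f} fm")
    print(f"SU(2) links: {link_memory_gib(L, 2):.0f} GiB, "
          f"SU(3) links: {link_memory_gib(L, 3):.0f} GiB")
```

Even before any computation, a 128^4 lattice needs tens of GiB just to hold one gauge configuration, which is why such volumes became practical only with large machines.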